digiKam 7.0.0-beta1 is released

Dear digiKam fans and users,

Just in time to get you into the holiday spirit, we are proud to release digiKam 7.0.0-beta1 today. This first beta starts the testing stage for the new 7.x series, planned for next year. Have a look at some of the highlights listed below to discover all the changes in detail.

Deep-Learning Powered Faces Management

For many years, digiKam has provided an important feature dedicated to detecting and recognizing faces in photos. The algorithms used in the background (not based on deep learning) were old and unchanged since the first release to include this feature (digiKam 2.0.0). They were not powerful enough to automate the face management workflow.

Until now, analyzing image content to isolate and tag people's faces was performed using the classical feature-based Cascade Classifier from the OpenCV library. This older approach works but does not provide a high rate of true positives. Face detection produced about 80% correct results, which is not too bad but requires a lot of user feedback to confirm or reject the detected faces. Also, according to user feedback in Bugzilla, Face Recognition did not provide a good experience for automatically tagging people.

During summer 2017, we mentored a first student, Yingjie Liu, to work on integrating a neural network into the face management pipeline, based on the Dlib library. The result was mostly demonstrative and very experimental, with poor computation speed. It can be considered a technical proof of concept, but it was not usable in production. The approach taken to solve the problem went the wrong way, which is why the deep learning option in face management was never activated. We tried again this year, and a new student, Thanh Trung Dinh, successfully completed a full rewrite of this code.

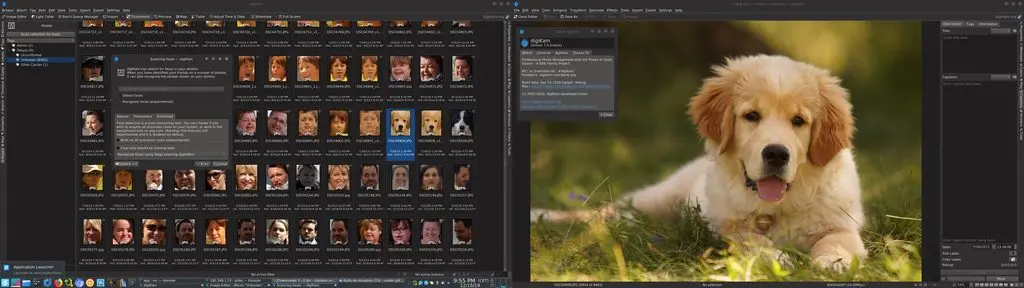

The goal of the project was to leave these older techniques behind and port the detection and recognition engines to more modern deep-learning approaches. The new code, based on the recent Deep Neural Network (DNN) module of the OpenCV library, uses neural networks with pre-trained data models dedicated to face management. No training stage is required to perform face detection and recognition. We gained in coding time, in run-time speed, and in success rate, which reaches 97% true positives. Another advantage is the ability to detect non-human faces, such as dogs, as you can see in this screenshot.

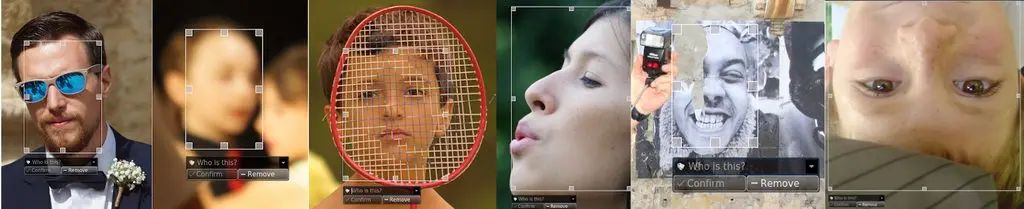

There are more improvements to face detection. The neural network model we use is a very good one: it can detect blurred faces, covered faces, profile faces, printed faces, upside-down faces, partial faces, etc. Processing huge collections gives excellent results with a low rate of false positives. See below some examples of face detection challenges handled by the neural network:

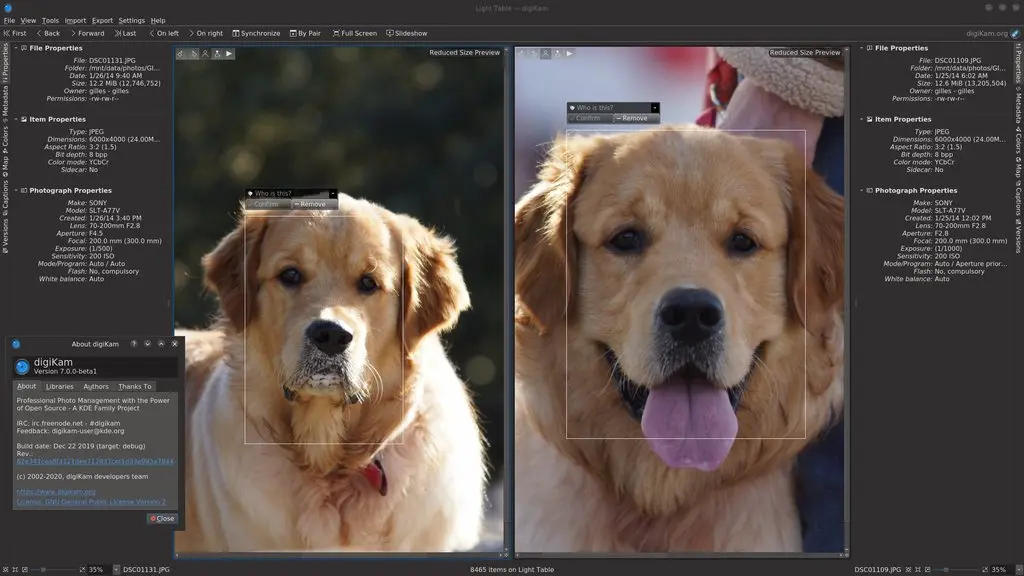

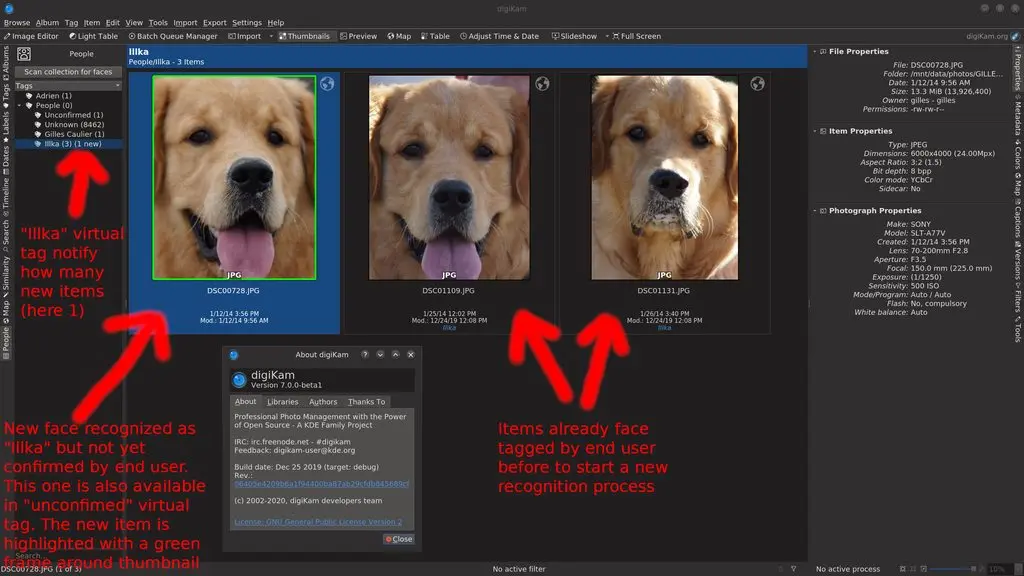

As for recognition, the workflow is the same as in previous versions but includes a few improvements. You need to teach the neural network some faces so that it can recognize them automatically across the collection. The user must tag some images of the same person and run the recognition process. The neural network will parse the faces already detected as unknown and compare them to the ones already tagged. If new items are recognized, the automatic workflow highlights the new faces with a green border around the thumbnail and reports how many new items are registered in each face tag. See the screenshot below, taken during face recognition.

Recognition can start working with just one tagged face, whereas at least 6 items were necessary to obtain results with the previous algorithms. Of course, the more faces are already tagged, the better the chances of good results. The true positive rate for recognition with deep learning is excellent, rising to 95%, whereas the older algorithms could not reach 75% even in the best cases. Recognition also includes a Sensitivity/Specificity setting to tune result accuracy, but we recommend keeping the default settings at first while experimenting with this feature on your collection.
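To illustrate the recognition step described above (comparing faces detected as unknown against faces already tagged), here is a minimal Python sketch. It assumes each face has already been turned into a numeric embedding vector by a neural network, and it assigns an unknown face to the person whose tagged face is most similar by cosine similarity, accepting the match only above a threshold. The function names, the toy 3-dimensional vectors, and the 0.8 threshold are hypothetical; this is not digiKam's actual code, and treating the Sensitivity/Specificity setting as a similarity threshold is an assumption.

```python
import math
from typing import Dict, List, Optional, Sequence

# Hypothetical type: a face embedding is a fixed-length vector of floats
# produced by a neural network (the network itself is not shown here).
Embedding = Sequence[float]


def cosine_similarity(a: Embedding, b: Embedding) -> float:
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # degenerate vector: treat as no similarity
    return dot / (norm_a * norm_b)


def recognize(
    unknown: Embedding,
    tagged: Dict[str, List[Embedding]],
    threshold: float = 0.8,  # hypothetical value, not a digiKam default
) -> Optional[str]:
    """Return the person tag whose closest tagged face best matches `unknown`,
    or None if no similarity reaches `threshold` (the face stays unknown)."""
    best_name: Optional[str] = None
    best_score = -math.inf
    for name, faces in tagged.items():
        for face in faces:
            score = cosine_similarity(unknown, face)
            if score > best_score:
                best_name, best_score = name, score
    return best_name if best_score >= threshold else None


if __name__ == "__main__":
    # One tagged face per person is enough to start matching.
    tagged = {"Alice": [[1.0, 0.0, 0.0]], "Bob": [[0.0, 1.0, 0.0]]}
    print(recognize([0.9, 0.1, 0.0], tagged))  # Alice
    print(recognize([0.5, 0.5, 0.7], tagged))  # None (too far from both)
```

In this sketch, raising the threshold rejects more borderline matches (higher specificity, lower sensitivity), while lowering it accepts more of them. A single embedding per person is enough for the lookup to work, and adding more tagged faces per person simply gives each unknown face more chances to find a close match.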

Performance is better than in previous versions, as the implementation supports multiple cores to speed up computations. We also worked hard to fix serious and complex memory leaks in the face management pipeline. This work took many months to complete, as the malfunctions were very difficult to reproduce. You can read the long story in this Bugzilla entry. Resolving this issue allowed us to close a long list of older reports about Face Management.

In the future, the new code structure will allow using other pre-trained data models to detect and recognize other shapes in images, such as monuments, plants, and animals. The difficulty is finding pre-trained data suited to the neural network. Writing code to train the network on non-human shapes will be time-consuming, as the neural network training stage is complex and very sensitive to errors.

To complete the project, Thanh Trung Dinh presented the new deep learning face management at Akademy 2019 in Milan in September. The talk was recorded and is available here as a video stream.

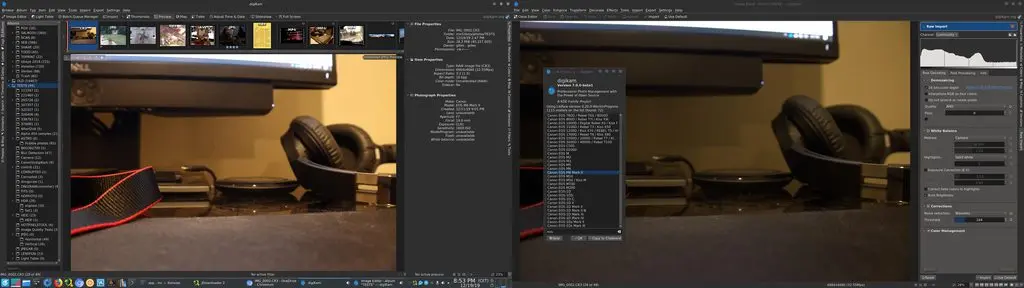

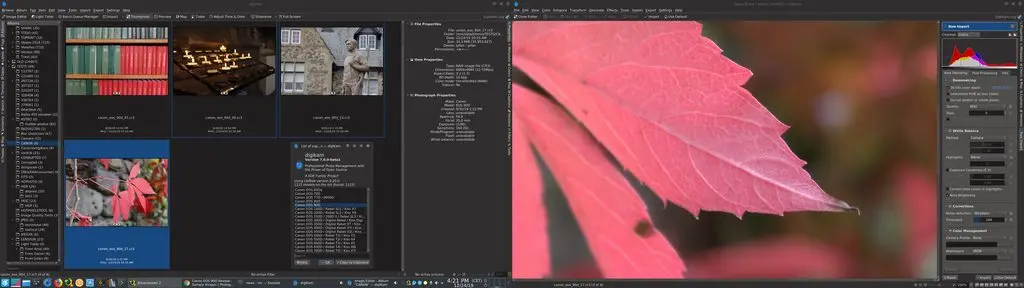

New RAW File Support Including the Famous Canon CR3 and Sony A7R4

digiKam tries to be as powerful as possible with all files produced by digital cameras. RAW file support is a big challenge. Some applications have been created solely to support RAW files from cameras, as this kind of support is complex, long to implement, and hard to maintain over time.

RAW files are not like JPEG. Nothing is standardized, and camera makers are free to change everything inside these digital containers without documentation. RAW formats allow makers to reinvent what already exists, implement hidden features, hide metadata, and require a powerful computer to process the data. When you buy an expensive camera, you might expect the images it produces to be thoroughly pre-processed by the camera firmware and ready to use immediately.

This is true for JPEG, but not for RAW files. Even if JPEG is not perfect, it is well standardized and well documented. For RAW, the format can change with each new camera release, as it depends deeply on camera sensor data that is not processed by the camera firmware. This requires intensive reverse-engineering that the digiKam team cannot handle on its own. This is why we use the powerful LibRaw library to post-process RAW files on the computer. This library includes complex algorithms to support all the different RAW file formats.

In this 7.0.0 release, we use the new LibRaw 0.20, which introduces support for more than 40 new RAW formats, especially from the most recent camera models on the market, including the new Canon CR3 format and the Sony A7R4 (61 Mpx!). See the list below for details:

- Canon PowerShot G5 X Mark II, G7 X Mark III, SX70 HS, EOS R, EOS RP, EOS 90D, EOS 250D, EOS M6 Mark II, EOS M50, EOS M200

- DJI Mavic Air, Osmo Action

- FujiFilm GFX 100, X-A7, X-Pro3

- GoPro Fusion, HERO5, HERO6, HERO7

- Hasselblad L1D-20c, X1D II 50C

- Leica D-LUX7, Q-P, Q2, V-LUX5, C-Lux / CAM-DC25

- Olympus TG-6, E-M5 Mark III

- Panasonic DC-FZ1000 II, DC-G90, DC-S1, DC-S1R, DC-TZ95

- PhaseOne IQ4 150MP

- Ricoh GR III

- Sony A7R IV, ILCE-6100, ILCE-6600, RX0 II, RX100 VII

- Zenit M

- and multiple smartphones…

In total, this LibRaw version can process more than 1100 RAW formats. You can find the complete list in digiKam and Showfoto through the Help/Supported Raw Camera dialog. Thanks to the LibRaw team for sharing and maintaining this expertise.

On The Road To 7.0.0

The next major version, 7.0.0, looks promising. The list of Bugzilla entries closed for this release is already impressive, and nothing is finished yet, as we plan a few betas before next spring, when we will officially publish the stable version. There are still bugs to fix during this beta campaign, and all help stabilizing the code will be welcome.

Thanks to all users for your support and donations, and to all contributors, students, and testers who allowed us to improve this release.

The digiKam 7.0.0-beta1 source code tarball, Linux 32/64-bit AppImage bundles, macOS package, and Windows 32/64-bit installers can be downloaded from this repository. Remember not to use this beta in production yet, and thanks in advance for your feedback in Bugzilla.

We wish you a Merry Christmas and a Happy New Year 2020 using digiKam…